The Great De-Platforming

Companies trip over themselves to ban hate speech. Took long enough.

Yesterday will be remembered (if we remember such things) as De-platforming Day.

For years, social platforms took a very public, if very wrong, stance that they were not the arbiters of truth. They were just conduits for people to exercise their voices, no matter how harmful or hateful.

On Monday, however, several platforms—Reddit, Twitch, YouTube—announced new policies they believe will help stem the flow of hate speech.

Researchers have mounds of data to show how the platforms serve as “echo chambers.” This study from 2016 found that:

using a massive quantitative analysis of Facebook, we show that information related to distinct narratives––conspiracy theories and scientific news––generates homogeneous and polarized communities (i.e., echo chambers) having similar information consumption patterns.

And this section of a literature review of “mapping online hate” from the academic journal PLoS One sums it up:

Online hate spreading has also emerged as a tool for politically motivated bigotry, xenophobia, homophobia, and excessive nationalism [9–12]. An example can be seen in the 2016 US elections; the narrative of “Make America Great Again” has empirically been shown to have amplified the online presence of white supremacists [9]. Social media platforms have granted a new spirit to radical nationalist groups including Klansmen and Neo-Nazis by ensuring anonymity or pseudonymity (i.e., disguised identity), ease of discussions, and spread of radical ideologies [1].

Moreover, social media and online forums have provided hate-driven terrorist groups a medium for launching propaganda to radicalize youth globally [13]. These groups use images and Internet videos to communicate their hateful intent, to trigger panic, and to cause psychological harm to the general public [14]. As a prime example of cyberterrorism, the Islamic State of Iraq & Syria (ISIS) effectively used social media to recruit youngsters from Europe to participate in the Syrian conflict [12]. Their social media campaigns led to at least 750 British youngsters joining Jihadi groups in Syria [13]. Overall, these real-world phenomena highlight the very real negative impact of spreading online hate and suggest that online hate can be considered as a major public concern.

With this backdrop: the great de-platforming.

Under the auspices of its new content policies, Reddit, the sixth-largest site on the internet, banned more than 2,000 subreddits, including the popular r/The_Donald, a place where 800,000 people went to share memes, spread conspiracy theories, and generally abuse the site’s other populations.

(Another subreddit the company banned: r/ChapoTrapHouse, a leftist subreddit. According to Reddit CEO Steve Huffman, both communities “consistently host rule-breaking content and their mods have demonstrated no intention of reining in their community.”)

By the time of Monday's ban, r/The_Donald had been pretty quiet for months: its users had migrated to a clone site because of restrictions the platform had already placed on the subreddit for frequent rule violations, Axios reported.

Twitch, the streaming service owned by Trump nemesis and actual billionaire Jeff Bezos, put Trump’s account into the penalty box for violating its rules. Trump, who has about 125,000 followers, has partnership status, which allows him to monetize his feed.

According to The Verge:

The suspension arrives a week after Twitch swore it would crack down on harassment within the community following reports of assault and harassment from streamers. It’s a sign that Twitch may be starting to take moderating streams a lot more seriously. The racist language it banned Trump for is often allowed on other platforms due to his role as a politician and president of the United States.

“Hateful conduct is not allowed on Twitch,” a Twitch spokeswoman said in a statement. “In line with our policies, President Trump’s channel has been issued a temporary suspension from Twitch for comments made on stream, and the offending content has been removed.”

YouTube on Monday announced it’s banning several white nationalist channels including those from Stefan Molyneux, David Duke, and Richard Spencer. This is a big deal; Molyneux, for instance, has more than 300 million video views.

In a statement to CNN, YouTube said:

“We have strict policies prohibiting hate speech on YouTube, and terminate any channel that repeatedly or egregiously violates those policies. After updating our guidelines to better address supremacist content, we saw a 5x spike in video removals and have terminated over 25,000 channels for violating our hate speech policies.”

YouTube said the channels repeatedly violated its rules by claiming that members of protected groups were innately inferior to others, as well as other violations.

These policies come weeks after Twitter started slapping warning notes on the President’s tweets that violate a Twitter policy and Snapchat said it would stop promoting Trump’s account.

Indeed, a recent paper published in the Proceedings of the 2020 ACM CHI Conference on Human Factors in Computing Systems found, according to Science Daily (I couldn’t access the original paper, just the abstract, so I’m leaning on Science Daily here), that:

pairing headlines with credibility alerts from fact-checkers, the public, news media and even AI, can reduce peoples' intention to share. However, the effectiveness of these alerts varies with political orientation and gender. The good news for truth seekers? Official fact-checking sources are overwhelmingly trusted.

Axios reports today that Facebook:

will be updating the way news stories are ranked in its News Feed to prioritize original reporting, executives tell Axios. It will also demote stories that aren't transparent about who has written them.

The timing of all the platforms’ newfound moral compasses is interesting for a few reasons: a couple of near-term ones and maybe one long-term one.

As advertisers pile on a month-long Facebook boycott predicated on the platform’s role in spreading hate speech, the other platforms are basically pulling an Abe Simpson walking into and then out of a brothel. Gonna pass on this one.

But also, let’s not ignore one of the bigger threads right now pushing conversation and action across a variety of industries: protests against systemic racism are putting pressure on every company. Silicon Valley is not immune, and as a predominantly white industry (at the executive level), these companies find themselves in the crosshairs of both advertisers and users. So doing something, like establishing these policies, is a start.

It’s also the end of Q2; as companies prepare for their quarterly earnings, which will likely be down due to the impact of the coronavirus, at least they’ll have something positive to say.

Finally, as Wired notes, perhaps they’re making a bet that Trump will lose in November. Maybe Silicon Valley is reading the polls that show Joe Biden with a big lead at the moment and is feeling emboldened that the bully in the White House won’t be able to retaliate—through “his” Department of Justice.

Trump is now a clear underdog in his reelection bid, lagging behind Joe Biden by double digits in some national polls and trailing badly in crucial swing states. Don’t think Silicon Valley hasn’t noticed. It suddenly appears they might not have to worry about what Trump thinks for much longer.

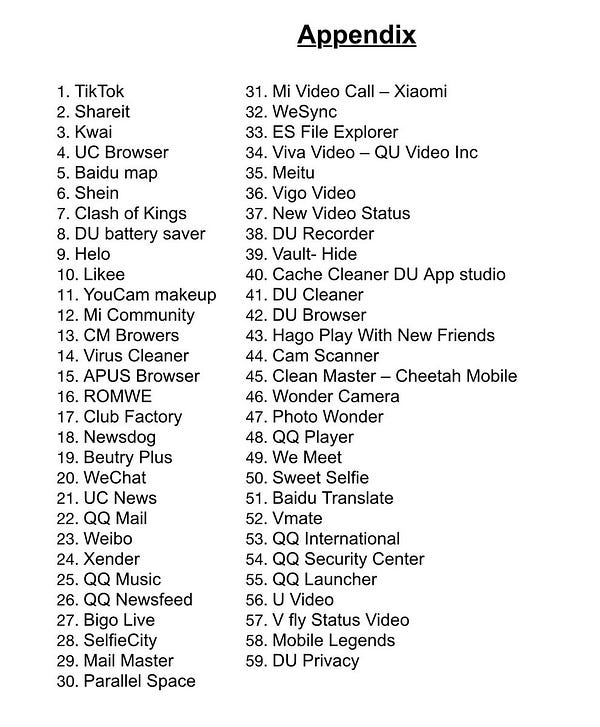

One other curious platform story from yesterday. India banned 59 Chinese apps, including TikTok.

Of TikTok’s 2 billion downloads, 611 million come from India. Losing roughly 30 percent of your user base in one swoop can be crushing. We’ll see how this goes.

But here in America, we still get Kellyanne Conway’s daughter posting anti-Trump videos on TikTok.

Thank you for allowing me in your inbox. If you have tips, or thoughts on the newsletter, drop me a line! Or you can follow me on Twitter.

Jack Peñate, “Torn On The Platform”

Some interesting links:

Amazon’s brand value tops $400 billion, boosted by the coronavirus pandemic: Survey (CNBC)

Roberts Isn’t a Liberal. He’s a Perfectionist Who Wants to Win. (Slate)

The New York Times Pulls Out of Apple News (NYT)

Newsonomics: The next 48 hours could determine the fate of two of America’s largest newspaper chains (Nieman Lab)

Barstool Sports Founder Unapologetic About Using Racist Language in ‘Comedy’ Videos: ‘I’m Uncancellable’ (Variety)